Building three-dimensional point clouds from high-resolution photos taken from unmanned aerial vehicles or drones may soon help plant breeders and agronomists save time and money compared with measuring crops manually.

Dr. Lonesome Malambo, postdoctoral research associate in the Texas A&M University ecosystem science and management department in College Station, recently published on this subject in the International Journal of Applied Earth Observation and Geoinformation.

He was joined on the study by Texas A&M AgriLife Research scientists Dr. Sorin Popescu, Dr. Seth Murray and Dr. Bill Rooney, their graduate students and others within the Texas A&M University System. Funding was provided by AgriLife Research, the U.S. Department of Agriculture National Institute of Food and Agriculture, Texas Corn Producers Board and United Sorghum Checkoff Program.

“What this multidisciplinary partnership has developed is transformative to corn and sorghum research, not just to replace our standard labor intensive height measurements, but to find new ways to measure how different varieties respond to stress at different times through the growing season,” Murray said. “This will help plant breeders identify higher yielding, more stress-resistant plants faster than ever possible before.”

Crop researchers and breeders need two types of data when deciding which crop improvement selections to make, Malambo said: genetic data and phenotypic data, which describe the physical characteristics of the plant.

Great strides have been made in genetics, he said, but there’s still much work to be done in measuring the physical traits of any crop in a timely and efficient manner. Currently, most measurements are taken on the ground by people walking through fields and measuring plants by hand.

Over the past few years, UAV photos have been tested to see what role they can play in helping determine characteristics such as plant height, which, measured over time, can help assess the influence of environmental conditions on plant performance.

Malambo said this study could be the first to generate 3-D point clouds with “structure from motion,” or SfM, techniques over corn and sorghum throughout a growing season. These two crops were selected because their height and canopy vary widely over the season.
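
To illustrate how a plant height might be read off such a point cloud, here is a minimal Python sketch. It is not the study's procedure, which this article does not describe. It assumes each plot's points are `(x, y, z)` tuples in meters, that the lowest points are bare ground and the highest are canopy top, and it uses percentiles rather than the minimum and maximum to blunt stray points. The function names and the 1st and 99th percentile defaults are hypothetical.

```python
def percentile(values, pct):
    """Linearly interpolated percentile of a non-empty list, pct in [0, 100]."""
    ordered = sorted(values)
    rank = (len(ordered) - 1) * pct / 100.0
    lo = int(rank)
    hi = min(lo + 1, len(ordered) - 1)
    frac = rank - lo
    return ordered[lo] * (1 - frac) + ordered[hi] * frac


def plot_height(points, ground_pct=1.0, canopy_pct=99.0):
    """Estimate canopy height as high-percentile z minus low-percentile z."""
    zs = [p[2] for p in points]
    return percentile(zs, canopy_pct) - percentile(zs, ground_pct)


# Made-up plot: 50 ground points at 100.0 m elevation, 50 canopy points at 102.0 m.
demo_points = [(i * 0.1, 0.0, 100.0) for i in range(50)]
demo_points += [(i * 0.1, 0.5, 102.0) for i in range(50)]
demo_height = plot_height(demo_points)
```

Repeating a calculation like this for every flight date would give a height curve for each plot over the season.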

While SfM is not new, it has rarely been evaluated for repeated plant height estimation, because earlier studies were limited to a single date or short UAV campaigns, he said.

In agricultural environments where conditions change due to crop maturity, Malambo said the next logical step was to determine if the methods were consistent, repeatable and accurate over the growth cycle of crops.

He said SfM technology uses overlapping images to reconstruct a 3-D view of a scene, going beyond typical flat photos by automatically calibrating the camera’s interior orientation (its internal geometry) and exterior orientation (its position and pointing direction). Small reference targets were placed in fields before each flight.

When a photo is taken from the UAV, it is basically transferring a 3-D scene into 2-D, Malambo explained. SfM tries to reverse this process using geometry, the properties of light and modeling.
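
The 3-D to 2-D step and its reversal can be sketched in a few lines of Python. This is a toy illustration under strong assumptions, not the software used in the study: both cameras point straight down, their positions are already known, and the same plant point has already been matched in both photos. Real SfM software must also estimate the camera positions and orientations from many matched features. All names and coordinates below are hypothetical.

```python
def project(point, cam_pos, focal=1.0):
    """Pinhole projection of a 3-D point into a camera looking straight down."""
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    depth = cam_pos[2] - point[2]  # distance of the point below the camera
    return (focal * dx / depth, focal * dy / depth)


def pixel_ray(pixel, focal=1.0):
    """Direction of the viewing ray that passes through an image coordinate."""
    return (pixel[0], pixel[1], -focal)


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def triangulate(cam_a, pix_a, cam_b, pix_b, focal=1.0):
    """Recover a 3-D point as the midpoint of closest approach of two rays."""
    d1, d2 = pixel_ray(pix_a, focal), pixel_ray(pix_b, focal)
    w = tuple(p - q for p, q in zip(cam_a, cam_b))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b  # zero only if the two rays are parallel
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(o + s * k for o, k in zip(cam_a, d1))
    p2 = tuple(o + t * k for o, k in zip(cam_b, d2))
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


# Hypothetical plant top seen from two overlapping photo positions (meters).
plant_top = (2.0, 3.0, 1.5)
cam_a, cam_b = (0.0, 0.0, 30.0), (5.0, 0.0, 30.0)
recovered = triangulate(cam_a, project(plant_top, cam_a),
                        cam_b, project(plant_top, cam_b))
```

Each photo alone loses the depth of the point. Only the pair of overlapping views pins it down, which is why flights are planned with heavy image overlap.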

“Once we recreate the scene, it looks the way it did when we captured it, multidimensional,” he said.

“In this study, we were interested in observing the whole growing cycle of these crops. We flew over the crops on 12 different dates and at the same time had people measure the growth on the ground on six of the dates.”

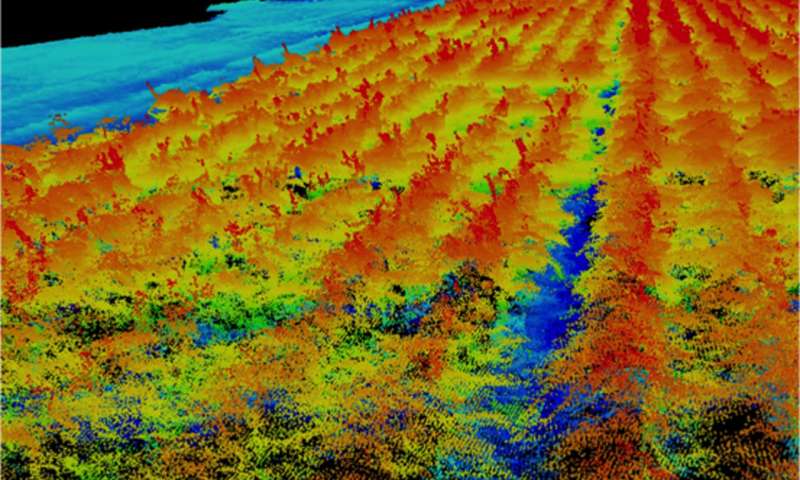

Popescu said that on two of the field measurement dates, a terrestrial laser scanning sensor, also known as terrestrial lidar, was used to collect reference data on plant canopy height.

“This is another unique aspect of our study,” he said. “To my knowledge, no other published study compared SfM point cloud measurements to lidar scanning, but only to manual field measurements of plant heights.

“The terrestrial lidar provides the most accurate measurements of the canopy, resulting in a point cloud of direct 3-D measurements,” Popescu said.

He said SfM provides reconstructed 3-D point clouds through photogrammetric methods, whereas lidar provides direct measurements using laser scanning. The terrestrial lidar sensor, or TLS, has limited coverage and must be placed on tall vehicles to view the canopy from above.

“It is really not practical to use the TLS for plant height measurements, mostly only for validation studies like ours,” Popescu said. “Lidar can be placed on a UAV, but those sensors are very expensive. We are currently assembling one UAV lidar sensor and will have it operational by the end of this year.”

Malambo said physical measurements were taken from May through July, while flight photos were taken from April through August.

“We got a very good correlation from the measurements in the field and the images we were able to produce,” he said. “There is great potential to reduce the time and cost of collecting data with affordable technology that can be used by farmers and researchers.”
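
An agreement check of this kind can be sketched in Python as follows. The article does not say which statistics the study reported, so the Pearson correlation and root-mean-square error here are assumptions, and the height values are made up for illustration. They are not the study's data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def rmse(xs, ys):
    """Root-mean-square difference between paired measurements."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


# Made-up paired plot heights in meters, NOT values from the study.
field_heights = [0.5, 1.0, 1.5, 2.0]  # measured by hand on the ground
sfm_heights = [0.4, 0.9, 1.4, 1.9]    # estimated from the point cloud

r = pearson_r(field_heights, sfm_heights)
error = rmse(field_heights, sfm_heights)
```

The two numbers answer different questions. Correlation shows whether taller plots in the field are also taller in the point cloud, while the error shows how far the estimates are from the ground measurements in absolute terms. In this made-up case the image-based heights track the field heights perfectly but run consistently low.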

This improvement in image analysis has opened an avenue for affordable non-metric cameras on UAV platforms to be used for reliable mapping and 3-D modeling in place of expensive airborne and terrestrial laser scanning, Malambo said. SfM software is easy to learn, automated and readily available.

The system isn’t without challenges, though, he said. In checking whether it stays accurate over time, the researchers saw that the technology depends on the quality of the images, Malambo said. It did well with sorghum, which is mainly foliage. Corn, which dries up and loses contrast as it matures, tends to blend in with the ground.

“Our general conclusion is structure from motion offers great potential to work for measuring plant height, but we need to make it more robust through the growing season,” he said. “Changes in wind speed can affect the drone cameras in capturing images. And that, in turn, affects the results of the 3-D capabilities.”

Malambo said he is searching for ways to improve the overall program, including reducing the processing time. Data captured this past growing season is massive and takes several days to process.

One idea he discussed is working with other departments on campus to enable online, real-time analysis of the field. Drone-captured images would be sent directly to a laptop computer, where advanced data analysis methods such as machine learning or deep learning could make the height data available immediately.

Source: phys.org